So I move the project over to the iPhone.

Everything works perfectly - no problems with NSLog() or breakpoints flaking out...

What the hell!

Wednesday, August 31, 2011

Tuesday, August 30, 2011

Misery (Continued)

So I found this link, which gives a way to reset the debugger to some degree:

1. Close XCode

2. Delete the $project/build/*

3. Delete /Developer/Platforms/iPhoneOS.platform/DeviceSupport/4.2.1

4. Restart XCode

5. Go to the Organizer and agree to let it "collect" what it wants (symbols) from the device

6. In Organizer, again right click -> Add device to provisioning portal

Doing this, I now have a project that ignores breakpoints and/or NSLog statements only about 10% of the time, which is almost tolerable. I set a breakpoint at the start of the app; if it doesn't stop, I just restart it.

It's really hard to imagine what these folders have to do with NSLog and breakpoints...

Misery (Continued)

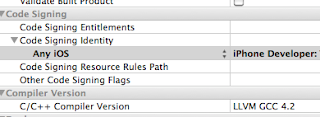

So the best I can do to fix the NSLog() and breakpoint problem on this particular project (or a project using the same file, i.e., another independently constructed duplicate project) is to diddle the Code Signing settings for "Any iOS" between "iPhone Developer" and "iPhone Developer: me XXXXXX".

Good news on the audio front...

It turns out that when you instantiate an AudioUnit, the category set with "[[AVAudioSession sharedInstance] setCategory:error:]" matters.

For example, with the category set to "AVAudioSessionCategoryPlayback" you can instantiate an AudioUnit with input, but the render loop will not be called. Changing the category to "AVAudioSessionCategoryPlayAndRecord" after instantiation does not change this, even if you stop and restart the AudioUnit.

So if you don't have a recording-capable category (such as PlayAndRecord) set when you instantiate the AudioUnit, your render loop will simply not be called.

I have been looking around at various documentation for AudioUnits, wondering what you are allowed to do and not do with multiple AudioUnits simultaneously. Certainly it seems you should be able to have more than one at any given time, and in fact my experiments suggest this is true as long as they don't overlap on input and output and you have the right category set at instantiation.

But there is little documentation of this - at least I could not find it.

AudioUnits and AVAudioPlayer do not work well together either. However, I probably did not have the categories set properly, so that might be part of the cause, though some other testing leads me to believe there are other incompatibilities.

Basically I eliminated AVAudioPlayer as well as some other MixerHostAudio code I was using. I now do my own mixing and sound triggering in a pair of AudioUnits and it seems far simpler and more efficient.
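Doing the mixing yourself inside a render callback mostly comes down to summing samples and keeping the result in range. Here is a minimal plain-C sketch (not my actual mixer), assuming both sources are mono 16-bit signed PCM at the same sample rate; that format is an assumption, and `mix_s16` is a hypothetical name. It allocates nothing and takes no locks, which is what you want on the audio thread.

```c
#include <stddef.h>
#include <stdint.h>

/* Mix two sources into out, one sample at a time.
   Assumes (hypothetically) mono, 16-bit signed PCM, same sample rate. */
void mix_s16(const int16_t *a, const int16_t *b, int16_t *out, size_t frames)
{
    for (size_t i = 0; i < frames; i++) {
        /* Widen to 32 bits so the sum cannot overflow before clamping. */
        int32_t sum = (int32_t)a[i] + (int32_t)b[i];

        /* Saturate instead of wrapping around, which would sound like a loud click. */
        if (sum > INT16_MAX) sum = INT16_MAX;
        if (sum < INT16_MIN) sum = INT16_MIN;

        out[i] = (int16_t)sum;
    }
}
```

Clamping is the simplest choice; scaling each source down (for example by half) before summing avoids clipping entirely at the cost of overall level.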

Monday, August 29, 2011

Misery (Continued)

So I have still not been able to figure out completely what's going on with the NSLog() and breakpoints.

I tried Googling 'xcode gdb "sharedlibrary apply-load-rules all"' and found this post (here) on various "code signing" issues.

It seems like the gdb debugger does not always attach to the remote iOS process unless some voodoo in the "code signing" section of the "Build" configuration is set to the proper phase of the moon.

Diddling this seems to make NSLog() and breakpoints work again:

I changed the 'Any OS' tag to some default.

This Apple software is totally flaky and unreliable in this regard.

[ UPDATE ] While this worked a few times in a row in XCode 3, no dice in XCode 4.

In XCode 4, diddling the same parameter does not make NSLog() or breakpoints work.

[ UPDATE ] I regenerated the provisioning profiles and upgraded a different iPad to 4.3.5 (I was on 4.3.3 for the problems above). After reinstalling all of this, it seems to be working. ("Seems to be working" basically means I have connected and disconnected the iPad several times over an hour or so, and each time breakpoints continued to work.)

Now that I think about it, I probably also updated iTunes to 10.4.1 (10), but I cannot recall whether the problems started before that or not. I think not in this case.

Is it fixed?

The jury is still out...

Sunday, August 28, 2011

XCode, AudioUnits, Breakpoints and NSLog Misery...

So I am debugging an iOS AudioUnit render callback function.

I have the exact same code working in a standalone project so I know that everything is correct. The code in question looks something like this:

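```objc
// Input (recording) callback for the RemoteIO AudioUnit.
// Assumes `audioUnit` is the unit instance and is in scope here (e.g. a global),
// and that samples are 16-bit mono (2 bytes per frame).
OSStatus recordingCallback(void *inRefCon,
                           AudioUnitRenderActionFlags *ioActionFlags,
                           const AudioTimeStamp *inTimeStamp,
                           UInt32 inBusNumber,
                           UInt32 inNumberFrames,
                           AudioBufferList *ioData)
{
    AudioBuffer buffer;
    AudioBufferList bufferList;
    OSStatus status;

    // ...

    // A single mono buffer: inNumberFrames frames x 2 bytes per sample.
    buffer.mNumberChannels = 1;
    buffer.mDataByteSize = inNumberFrames * 2;
    buffer.mData = malloc(inNumberFrames * 2);  // allocating on the audio thread is not real-time safe

    bufferList.mNumberBuffers = 1;
    bufferList.mBuffers[0] = buffer;

    // ...

    // Pull the captured input samples into bufferList.
    status = AudioUnitRender(audioUnit,
                             ioActionFlags,
                             inTimeStamp,
                             inBusNumber,
                             inNumberFrames,
                             &bufferList);

    if (status != noErr)
    {
        // Debug only: logging from the audio thread is not real-time safe either.
        NSLog(@"An AudioUnitRender error occurred (%d).", (int)status);
    }

    // ...

    free(buffer.mData);  // release this callback's buffer so it does not leak
    return noErr;
}
```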

The buffer arrangement is such that only a single buffer is being used.
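The callback above calls malloc() on every invocation, which is not real-time safe on the audio thread. A common alternative is to allocate once at setup, sized for the largest slice the unit will ask for (for example the value of kAudioUnitProperty_MaximumFramesPerSlice), and only adjust the byte count per callback. The sketch below uses hypothetical plain-C stand-ins (`SketchBuffer`, `SketchBufferList`, `RecordBuffer`) with the same field names as AudioBuffer/AudioBufferList so it compiles without Core Audio; in a real project you would use the Core Audio types directly. The 2 bytes per frame matches the 16-bit mono layout assumed in the callback.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical stand-ins mirroring AudioBuffer / AudioBufferList field names. */
typedef struct {
    uint32_t mNumberChannels;
    uint32_t mDataByteSize;
    void    *mData;
} SketchBuffer;

typedef struct {
    uint32_t     mNumberBuffers;
    SketchBuffer mBuffers[1];
} SketchBufferList;

typedef struct {
    SketchBufferList list;
    uint32_t capacityBytes;  /* size of the block allocated at setup */
} RecordBuffer;

/* Setup time (not the audio thread): allocate once for the largest slice. */
int record_buffer_init(RecordBuffer *rb, uint32_t maxFrames, uint32_t bytesPerFrame)
{
    rb->capacityBytes = maxFrames * bytesPerFrame;
    rb->list.mNumberBuffers = 1;
    rb->list.mBuffers[0].mNumberChannels = 1;
    rb->list.mBuffers[0].mDataByteSize = rb->capacityBytes;
    rb->list.mBuffers[0].mData = malloc(rb->capacityBytes);
    return rb->list.mBuffers[0].mData != NULL ? 0 : -1;
}

/* Per callback: no allocation. The render call may overwrite mDataByteSize,
   so it is reset every time. Returns -1 if the slice would overrun the buffer. */
int record_buffer_prepare(RecordBuffer *rb, uint32_t inNumberFrames, uint32_t bytesPerFrame)
{
    uint32_t needed = inNumberFrames * bytesPerFrame;
    if (needed > rb->capacityBytes)
        return -1;
    rb->list.mBuffers[0].mDataByteSize = needed;
    return 0;
}

/* Teardown time: release the block. */
void record_buffer_free(RecordBuffer *rb)
{
    free(rb->list.mBuffers[0].mData);
    rb->list.mBuffers[0].mData = NULL;
    rb->capacityBytes = 0;
}
```

In the callback you would call record_buffer_prepare(&rb, inNumberFrames, 2) and pass &rb.list (or, with the real types, the equivalent AudioBufferList) to AudioUnitRender.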

In the standalone project status is always equal to noErr, the NSLog function is never called and the render works fine.

When I copy this code without change into the main project suddenly all hell breaks loose.

First off, status starts reliably returning -50 (kAudio_ParamError, which indicates a bad parameter is being passed to AudioUnitRender).

Bad parameter?

How on earth can that be, when the exact same parameters work perfectly in the standalone project? After endless diddling to ensure that I am not doing something stupid, I start to look for other reasons this might be failing.

Eventually I discover a post on some Apple developer list where someone else has a similar problem. They trace this to the use of AVAudioPlayer and its associated play method. (Basically this means that if some other part of the project is playing background music, the above code ceases to work correctly and reports that bad parameters are being passed.)

Commenting out this code (the AVAudioPlayer code along with some MixerHostAudio-derived code) appears to make the project work, but I notice that I am no longer seeing NSLog output (the project barfs out all kinds of status as it boots up). Then breakpoints seem to stop working too, so I cannot tell whether removing AVAudioPlayer really helps or not.

Now I become extremely frustrated.

How can NSLog simply cease to work?

So I think - well, this must be an XCode 4 problem...

I open the project in the latest version of XCode 3 and NSLog does not work there either nor do breakpoints...!!!

What the hell? I curse Apple, Jobs, and the iOS development staff.

(This is made worse by recently discovering that Adobe CS 2 will not work on Lion, and Lion is required for the newest XCode 4 and, no doubt, iOS 5. I want a law passed that requires Apple to compensate me for taking away functionality with required software releases...)

Now what?

More hours of Googling and diddling until I find this on StackOverflow (with some changes):

1. Quit XCode 3/4.

2. Open a terminal window and cd to the project directory

3. cd into the .xcodeproj directory

4. Delete everything except the .pbxproj file.

Or, in Finder, Right-click (or Control-click) the .xcodeproj bundle, pick "Show Package Contents," and remove everything but the .pbxproj file.

This seems to work - at least some of the time and sort of magically the breakpoints and NSLog output reappear and work as expected!

But then it flakes out again: sometimes working, sometimes not.

I guess at this point the only things I can come up with are either

A) Somehow the render function is messing things up. Everything except the microphone callback works just fine, or at least it did: no flakiness until I added that extra AudioUnit code. It seems as if this addition permanently whacked out XCode 4 for this project (others, including the standalone version of the AudioUnit, at least appear to still work correctly).

B) Mixing too much AudioUnit stuff is a problem or flaky... Others report the AVAudioPlayer problem with AudioUnits and with other things (like playing movies).

More to come...

Thursday, August 25, 2011

iOS 4, Voip

I have a VOIP application. It runs in the background as per the Apple docs.

One thing is interesting. In the handler for my input and output streams I have this code:

```objc
UIApplicationState state = [UIApplication sharedApplication].applicationState;

if (state == UIApplicationStateActive)
{
    NSLog(@"APPSTATE: UIApplicationStateActive");
}
else if (state == UIApplicationStateInactive)
{
    NSLog(@"APPSTATE: UIApplicationStateInactive");
}
else if (state == UIApplicationStateBackground)
{
    NSLog(@"APPSTATE: UIApplicationStateBackground");
}
else
{
    // UIApplicationState is an NSInteger, so cast for %d.
    NSLog(@"APPSTATE: Unknown: %d", (int)state);
}
```

According to the headers the only allowable values for "applicationState" are UIApplicationStateActive, UIApplicationStateInactive, and UIApplicationStateBackground, whose raw values are 0, 1 and 2.

When my app was in the background with TCP/IP running, my first version of this handler printed out

APPSTATE: Unknown: 2

So it seemed like the background VOIP state was 2, an undocumented state at that! It isn't: that first version tested UIApplicationStateInactive twice and never tested UIApplicationStateBackground, so the background state fell through to the final else. The 2 is simply UIApplicationStateBackground, and with the third test corrected as above the handler logs APPSTATE: UIApplicationStateBackground.
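A repeated UIApplicationStateInactive test in an if/else chain compiles without complaint. A switch over the enum lets the compiler catch both mistakes: a duplicated case label is a hard error, and with no default label, -Wswitch (part of -Wall) warns when an enumerator is missing. This is a plain-C sketch with a hypothetical mirror enum `AppState` (values copied from UIApplicationState) and a hypothetical helper `app_state_name`, so it compiles without UIKit.

```c
/* Hypothetical plain-C mirror of UIApplicationState:
   Active = 0, Inactive = 1, Background = 2. */
typedef enum {
    AppStateActive = 0,
    AppStateInactive = 1,
    AppStateBackground = 2
} AppState;

/* Every enumerator is listed and there is no default label, so the
   compiler can flag a missing case; a duplicate case would not compile. */
const char *app_state_name(AppState state)
{
    switch (state) {
    case AppStateActive:     return "UIApplicationStateActive";
    case AppStateInactive:   return "UIApplicationStateInactive";
    case AppStateBackground: return "UIApplicationStateBackground";
    }
    return "Unknown";  /* only reached for out-of-range raw values */
}
```

In the Objective-C handler the same pattern is a switch on [UIApplication sharedApplication].applicationState with one case per UIApplicationState constant.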

Monday, August 15, 2011

MIDI Polyphony (Part 1)

In working on the 3i controller my experience with the iConnectMIDI has led me down an interesting path.

One of the things the 3i needs to be able to do is detect "movement nuance" and to transmit information to an associated sampler or synth about what to do with that movement nuance.

I define "movement nuance" to be a continuously variable parameter that is associated with a given playing note. MIDI Aftertouch is an example of this - typically used to determine how much "pressure" is being used to hold down a key after a note is sounded, i.e., once the piano key is down I can push "harder" to generate a different Aftertouch value.

MIDI Pitch Bend is similar, except that it is a channel-wide message: it affects all the notes on the channel, not just the one you are currently touching.

Normal MIDI keyboards are "note polyphonic" which means that you can play a chord. But the "effects" that MIDI allows in terms of "movement nuance" are limited.

Traditionally MIDI is used with keyboards and, to a degree, with wind instruments, where its limited ability to handle "movement nuance" is not really an issue or where things like Aftertouch are sufficient for whatever the musician is trying to do.

But if you think about other kinds of instruments, for example string instruments, then there is a lot more nuance associated with playing the note. Some examples of this include "hammer on" and "hammer off", "bending" as on a guitar or violin, sliding "into a note" on a violin, the notion that some other element such as a bow is sounding a note at one pitch and the pitch now needs to change, and so on.

Then there is the effect after releasing the note, e.g., "plucking" a string or sounding a piano note with the damper up. The note sounds in response to the initial note event. The key is released (MIDI Note Off) but the note still sounds.

While this is fine on a piano, it's much different on a stringed instrument. For something like a violin you may be holding down a string which is plucked. After the pluck is released you may wish to slide the finger that's holding the note to change pitch.

Here MIDI does not offer significant help.

The notion of a note sounding beyond your interaction with a keyboard is also very non-MIDI. MIDI is designed around a basic "note on"/"note off" model where everything else revolves around that.

Certainly it's possible to try and "extend" MIDI with CC values or other tricks in order to get more information per note, but MIDI wasn't really designed for that. (Some MIDI guitars run one note per channel, but that's about all the innovation they can muster.)
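Pitch Bend is the reason one-note-per-channel schemes exist: because it is a channel message, a single note can only be bent on its own if it has a channel to itself. A minimal C sketch of building the 3-byte message; `midi_pitch_bend` is a hypothetical helper, while the byte layout (status 0xE0 plus the channel, then the low and high 7 bits of a 14-bit value centered at 8192) is the standard MIDI 1.0 encoding.

```c
#include <stdint.h>

/* Build a 3-byte MIDI Pitch Bend message.
   channel: 0-15 (shown to users as 1-16).
   bend: 14-bit value 0-16383, where 8192 means "no bend". */
void midi_pitch_bend(uint8_t channel, uint16_t bend, uint8_t out[3])
{
    if (bend > 16383)
        bend = 16383;                              /* clamp to 14 bits */
    out[0] = (uint8_t)(0xE0 | (channel & 0x0F));   /* status: Pitch Bend + channel */
    out[1] = (uint8_t)(bend & 0x7F);               /* LSB: low 7 bits */
    out[2] = (uint8_t)((bend >> 7) & 0x7F);        /* MSB: high 7 bits */
}
```

Sending this on a channel that carries only one sounding note bends just that note, which is exactly the per-note control a standard keyboard setup lacks.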

From my perspective with the 3i really the only thing left is to reconsider the whole notion of what a MIDI keyboard really is.

So my idea is that, in a very general sense, when you touch a playing surface you create a sort of MIDI "virtual channel" that is associated with whatever that touch (and corresponding sound) is supposed to do. That "virtual channel" lives on through the sounding of the note through whatever "note on" activities are required, through the "note off" and on through any sort of "movement nuance" that continues after the "note off" events, e.g., plucking a violin note on E and sliding your finger up to E#.

This model gives the controller a full MIDI channel to interact with a synth or sampler for the entire duration (lifetime) of the "note event", which I define as lasting from the initial note sounding, through note off, to the "final silence".

So instead of having a rack of virtual instruments for an entire "keyboard", you now have the idea of a full MIDI channel of virtual instruments for a single "touch" that lasts the lifetime of a note. The virtual rack is conjured up at the initiation of the sound and "run" until "final silence", at which point it is recycled for another note.

Each "virtual rack" associated with a note has to last as long as the note is sounding.

This allows a controller to manipulate MIDI data on its "virtual channel" as the life of the note progresses.

Now, since a note can last a long time (seconds) and the player can continue to pluck or play other notes you find that you can quickly run out of channels on a standard 16 channel MIDI setup because a MIDI channel can be tied up with that note for quite a while (also seconds) - particularly when you factor in having two different instruments (say bass and guitar) set up (as a split) or when you have layers.

This is effectively MIDI polyphony in the sense that you need to figure out the maximum number of channels that could be active at a given time. On "hardwired" synths or samplers this is typically something like 64 or 128 or more. (Of course they are managing simultaneous sound samples, not MIDI channels. But in my case we need MIDI via the 3i to do the job of the hardware sampler so we need MIDI channels instead of (or to act as) "voices".)

Which leaves us with the problem that 16 MIDI channels are simply not enough.

And this means that using a MIDI device to move data from the 3i to the synth or sampler is inherently limited because at most it can support only 16 channels.
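The channel-exhaustion problem can be modeled as a simple allocator. This is a hypothetical sketch, not the 3i's code: each touch takes one of the 16 channels, Note Off does not free it, and the channel is only recycled once the note's expected "final silence" time has passed. The names (`ChannelPool`, `pool_acquire`, `pool_note_off`) and the millisecond release estimate are assumptions for illustration.

```c
#include <stdint.h>

#define MIDI_CHANNELS 16

/* Hypothetical pool: a channel is busy from the touch that starts a note,
   through Note Off, until that note's "final silence". */
typedef struct {
    int      busy[MIDI_CHANNELS];
    uint32_t silenceAtMs[MIDI_CHANNELS];  /* expected time of final silence */
} ChannelPool;

void pool_init(ChannelPool *p)
{
    for (int ch = 0; ch < MIDI_CHANNELS; ch++) {
        p->busy[ch] = 0;
        p->silenceAtMs[ch] = 0;
    }
}

/* Returns a channel 0-15 for a new touch, or -1 if all 16 are still sounding.
   Channels whose final silence has passed are reclaimed on the way. */
int pool_acquire(ChannelPool *p, uint32_t nowMs)
{
    for (int ch = 0; ch < MIDI_CHANNELS; ch++) {
        if (p->busy[ch] && nowMs >= p->silenceAtMs[ch])
            p->busy[ch] = 0;                  /* note has decayed: recycle */
        if (!p->busy[ch]) {
            p->busy[ch] = 1;
            p->silenceAtMs[ch] = UINT32_MAX;  /* unknown until Note Off */
            return ch;
        }
    }
    return -1;
}

/* Note Off does NOT free the channel; it stays reserved for the release tail. */
void pool_note_off(ChannelPool *p, int ch, uint32_t nowMs, uint32_t releaseMs)
{
    p->silenceAtMs[ch] = nowMs + releaseMs;
}
```

With 16 channels the 17th overlapping touch gets -1, even if every earlier note has already received its Note Off; a real controller would then have to steal a channel or drop the note.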

This is further compounded by the fact that once the MIDI leaves the host OS it must be throttled down to 31.25K baud in order to comply with the MIDI standard (see this PDF).

I believe this is what I am seeing with iConnectMIDI: as fast as the device is, it cannot move data off an iOS device faster than 31.25K baud because iOS is throttling it.

So the only way to get data off the device is via a high speed USB cable (WiFi being no good for reasons mentioned before).
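To put the serial MIDI limit in numbers: MIDI 1.0 runs at 31,250 bits per second, and each byte goes over the wire as 10 bits (a start bit, 8 data bits, a stop bit). A 3-byte message such as Note On or Pitch Bend therefore costs 30 bits. The helper names below are hypothetical; the arithmetic is the point.

```c
#include <stdint.h>

#define MIDI_BAUD 31250u              /* MIDI 1.0 serial rate, bits per second */
#define BITS_PER_BYTE_ON_WIRE 10u     /* 1 start + 8 data + 1 stop bit */

/* Whole messages per second for messages of msgBytes bytes each. */
uint32_t midi_messages_per_second(uint32_t msgBytes)
{
    return MIDI_BAUD / (msgBytes * BITS_PER_BYTE_ON_WIRE);
}

/* Updates per second each of `channels` channels gets if they share the link equally. */
uint32_t midi_updates_per_channel(uint32_t msgBytes, uint32_t channels)
{
    return midi_messages_per_second(msgBytes) / channels;
}
```

That is about 1041 three-byte messages per second, or about 1562 with running status (repeated status bytes omitted, so 2 bytes per message). Spread across 16 channels each streaming Pitch Bend, every channel gets roughly 65 updates per second before a single note is sent.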

Wednesday, August 3, 2011

Avoiding iOS "Sync in Progress"...

As a developer I am often in a position of connecting an iOS device to my Mac for various reasons.

It is vastly annoying that every time I connect the iPad iTunes tries to sync with it - even if the iPad or iPhone is synced with another computer (and even when you tell iTunes not to bother with this particular iOS device).

So far I have not figured out how to turn this off in iTunes in a suitable way. I want the devices I use for business to sync when connected, but not development devices, which might be synced to other Macs. I seem to be able to disable it for devices synced with a given Mac but not for devices synced with other Macs.

However, you can defeat it by simply being lazy!

Simply wait for a new version of iTunes to show up and then don't launch it.

Each new version of iTunes insists that you agree to the vast legalese it presents the first time it runs. However, until you agree (or disagree), no syncing occurs when you attach an iOS device. So as long as you don't need to run iTunes on your development machine, you can safely ignore it and its legalese and happily connect and disconnect to your heart's content without "Sync in Progress".

Tuesday, August 2, 2011

The Apple 30-Pin Misery Plan...

I have been struggling to get high-speed MIDI information out of iOS devices. Last time I wrote about how the iConnectMIDI failed to give me what I was looking for (I have an email into their support group but so far no follow up response). Since then - and though I am still playing with that device - I have found another device that I thought would help.

But first I'd like to talk about what I think is going on with iOS and MIDI.

First off, I believe that the ubiquitous 30-pin Apple USB connector is filled with magic. In particular there is a "chip" of some sort that resides on one end or the other of the 30-pin that does a lot of magical things for connecting devices. It is said that there is a patent involved and that anyone wanting to create a cable that is plug-compatible with the iOS devices must use that patented or licensed technology.

There is basically a single USB 2.0 output channel on a given iOS device available via the 30-pin connector. USB is a high-speed (500 Mb) frame-based architecture and requires two pins on the 30-pin (see this). Since MIDI output is basically serial output the 30-pin has to share the USB pins and hence the USB.

So what Apple appears to have done is make iOS devices "look" for class-compliant MIDI devices on the USB line via the Camera Connection Kit; if it finds one, it talks to it.
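For reference, "class compliant" has a concrete meaning on the USB side: a USB-MIDI device exposes an interface whose descriptor says Audio class (0x01), MIDIStreaming subclass (0x03). The C sketch below checks those fields on a standard 9-byte interface descriptor. It illustrates the generic USB-MIDI identification rule only; it is an assumption that iOS does something equivalent, since its internals aren't documented here, and the struct and function names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Field layout of a standard USB interface descriptor (9 bytes). */
typedef struct {
    uint8_t bLength;
    uint8_t bDescriptorType;      /* 0x04 = INTERFACE */
    uint8_t bInterfaceNumber;
    uint8_t bAlternateSetting;
    uint8_t bNumEndpoints;
    uint8_t bInterfaceClass;      /* 0x01 = Audio */
    uint8_t bInterfaceSubClass;   /* 0x03 = MIDIStreaming (within Audio) */
    uint8_t bInterfaceProtocol;
    uint8_t iInterface;
} UsbInterfaceDescriptor;

#define USB_DESC_TYPE_INTERFACE    0x04
#define USB_CLASS_AUDIO            0x01
#define USB_SUBCLASS_MIDISTREAMING 0x03

/* Hypothetical helper: true if this interface advertises itself as a
   class-compliant USB-MIDI (Audio class, MIDIStreaming subclass) interface. */
bool is_class_compliant_midi(const UsbInterfaceDescriptor *d) {
    return d->bDescriptorType == USB_DESC_TYPE_INTERFACE &&
           d->bInterfaceClass == USB_CLASS_AUDIO &&
           d->bInterfaceSubClass == USB_SUBCLASS_MIDISTREAMING;
}
```

A vendor-specific device (class 0xFF) fails this check, which matches the observation that anything needing its own driver simply isn't picked up.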

(Note that the Camera Connection Kit only works on iPad at this time.)

However, the USB coming out of the iOS device does not seem to support the usual USB setup where several devices share one line through a hub (at least I have not been able to get it to work that way, though more testing is required). iOS also wants to power the USB MIDI device and, since Apple tightly controls the battery situation, the device cannot draw too much power or it's rejected.
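A USB device declares how much bus current it wants in its configuration descriptor's bMaxPower field, expressed in units of 2 mA. The C sketch below converts that field and compares it against a power budget. The budget is a hypothetical parameter: the actual limit iOS enforces isn't stated here, so treat the policy function as an illustration of the idea, not Apple's rule.

```c
#include <stdbool.h>
#include <stdint.h>

/* In a USB 2.0 configuration descriptor, bMaxPower is in units of 2 mA,
   so a value of 50 means the device asks for 100 mA. */
unsigned max_power_milliamps(uint8_t bMaxPower) {
    return 2u * bMaxPower;
}

/* HYPOTHETICAL host-side policy: accept the device only if its declared
   draw fits within budget_ma. budget_ma is illustrative, not a known iOS value. */
bool fits_power_budget(uint8_t bMaxPower, unsigned budget_ma) {
    return max_power_milliamps(bMaxPower) <= budget_ma;
}
```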

Next there is the Apple iOS software talking to the USB port. What the USB line can do is limited by the iOS drivers available. The key here is "class compliant": if your MIDI device is class compliant and draws minimal power, it works. Ditto for various audio interfaces, as documented here (I did not try this myself, but it makes sense that class-compliant audio would work as well). If iOS doesn't know a USB device, it simply isn't recognized or processed. Add to this the fact that you cannot load drivers into iOS and you end up quite limited.
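Part of what "class compliant" buys you is a fixed wire format. Under the USB-MIDI 1.0 class, ordinary MIDI bytes travel in 4-byte event packets: the first byte holds a cable number (high nibble) and a Code Index Number (low nibble), and the remaining three bytes are the normal MIDI message. The C sketch below packs a Control Change that way. The function name is hypothetical; the packet layout and the Control Change CIN (0xB) come from the USB-MIDI class definition.

```c
#include <stdint.h>

/* USB-MIDI 1.0 Code Index Number for a Control Change message. */
#define USB_MIDI_CIN_CONTROL_CHANGE 0x0B

/* Hypothetical helper: pack a MIDI Control Change into a 4-byte USB-MIDI
   event packet. Byte 0 = cable number (high nibble) | CIN (low nibble);
   bytes 1..3 = the ordinary 3-byte MIDI message. */
void usb_midi_pack_cc(uint8_t out[4], uint8_t cable, uint8_t channel,
                      uint8_t controller, uint8_t value) {
    out[0] = (uint8_t)(((cable & 0x0F) << 4) | USB_MIDI_CIN_CONTROL_CHANGE);
    out[1] = (uint8_t)(0xB0 | (channel & 0x0F));  /* CC status byte, channel 0-15 */
    out[2] = (uint8_t)(controller & 0x7F);        /* MIDI data bytes are 7-bit */
    out[3] = (uint8_t)(value & 0x7F);
}
```

For example, CC 7 (volume) with value 100 on channel 0, cable 0, packs to the bytes 0x0B 0xB0 0x07 0x64.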

So the bottom line is that if you want MIDI out of an iOS device you have to use either 1) a Camera Connection Kit on iPad, 2) an iConnectMIDI on any iOS device, or 3) your own solution.

All this leads to something I had hoped to use to solve this: dockStubz from cableJive.

dockStubz is a 30-pin pass-through with a mini USB port on the side. My hope was that MIDI devices connected through the dockStubz via the Camera Connection Kit would work while I ran the debugger.

The device has its own male 30-pin on one end and a female 30-pin on the other.

The mini USB port is on the side.

It connects to the iPhone or iPad, and the USB cable works just as if you were using a standard out-of-the-box Apple 30-pin to USB cable.

It works in XCode just fine. There's only one rub: you cannot plug anything else into the 30-pin and have the USB connection work. So plugging in the Camera Connection Kit disconnects the mini USB.

Rats! So much for believing internet posts about debugging MIDI on the iPad.

Now that I have done my research, I can see why it doesn't work. The mini USB port is physically connected to the iOS USB pins on the 30-pin only as long as nothing is plugged into the 30-pin. Plugging in another device breaks that connection.

The other thing that seems to be happening is that when a MIDI device is attached, some form of throttling goes on to ensure that the attached MIDI device is not overrun. I found this out when a bug in my code had me dumping MIDI CC messages out in a near-tight loop.
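I don't know how iOS actually throttles, but one plausible way to keep a receiver from being overrun is to pace output at DIN MIDI wire speed: at 31,250 bit/s with 10 bits per byte, each byte takes 320 microseconds, so a 3-byte CC takes 960 microseconds. The C sketch below computes the earliest send time for each message in a burst under that assumption. It is a hypothetical pacing scheme for illustration, not a description of Apple's driver.

```c
#include <stddef.h>
#include <stdint.h>

/* One byte on a DIN MIDI wire: 10 bits at 31,250 bit/s = 320 microseconds. */
#define MIDI_US_PER_BYTE 320u

/* HYPOTHETICAL pacing scheme: fill times_us[i] with the earliest time
   (microseconds from the start of the burst) at which message i may be sent
   so the cumulative output never exceeds DIN MIDI speed.
   msg_len[i] is the byte length of message i (3 for a Control Change). */
void schedule_burst(const size_t *msg_len, size_t count, uint64_t *times_us) {
    uint64_t t = 0;
    for (size_t i = 0; i < count; i++) {
        times_us[i] = t;
        /* The next message waits until this one has drained off the wire. */
        t += (uint64_t)msg_len[i] * MIDI_US_PER_BYTE;
    }
}
```

At that rate a tight loop emitting 1,000 CC messages would take 0.96 seconds to drain, so a bug that floods the port would show up as an obvious slowdown rather than full USB speed.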

Sending these out via my own USB line happens at full USB speed with no throttling (however, there I am not going through the iOS MIDI driver and am instead performing other trickery).

The next test will be to determine whether MIDI port to MIDI port traffic inside the iOS device is also throttled. At least on a Mac running 10.6.8 this is not the case: MIDI port to MIDI port seems to run full bore. My guess is that some sort of magic happens in the conversion from iOS to USB.

Only more time will tell...
